Uncovering Bias in AI's Role Inside the Exam Room

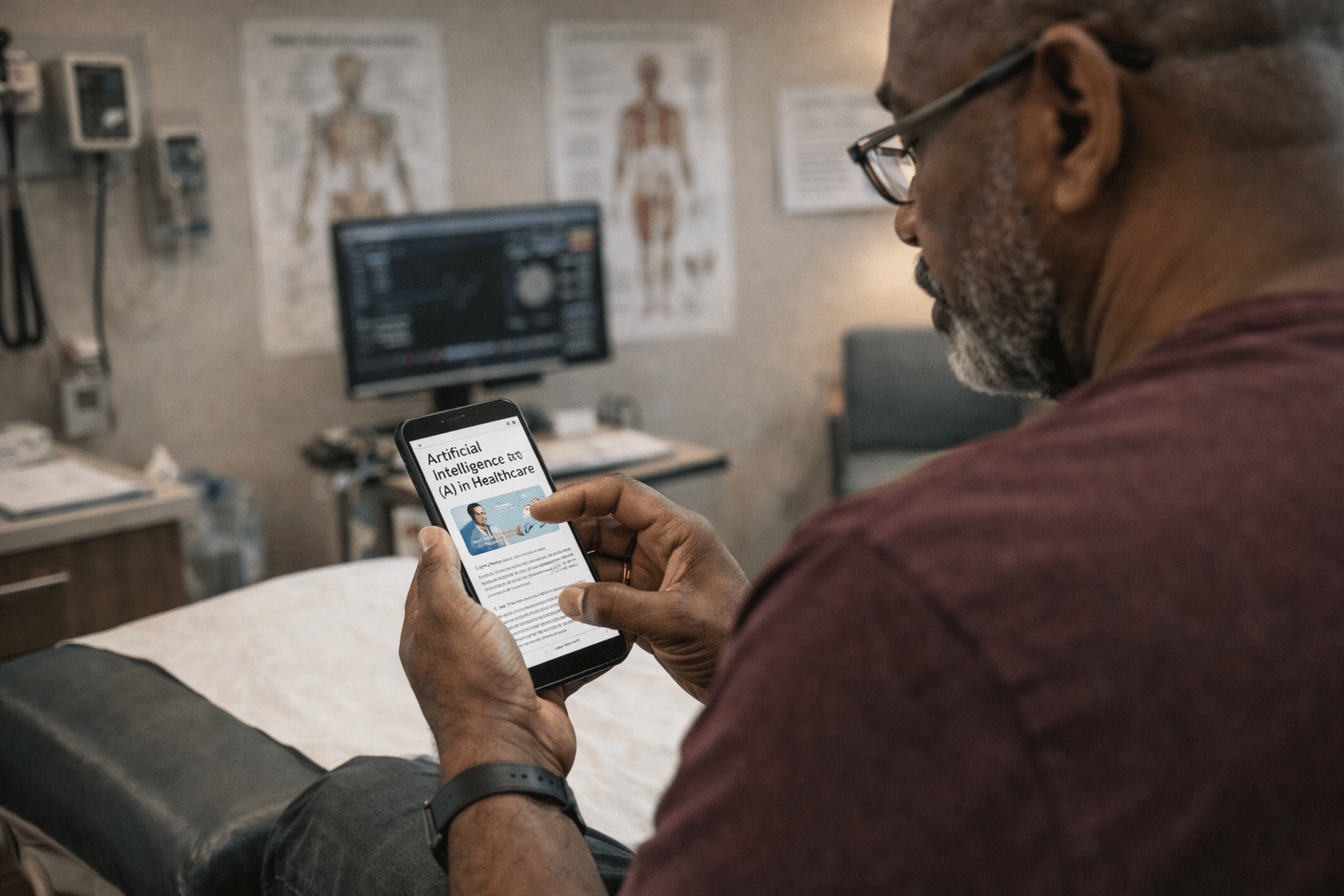

Artificial intelligence is already at work throughout healthcare: in exam rooms, hospitals, insurance systems, and telehealth platforms. AI tools help draft clinical notes, identify high-risk patients, suggest diagnoses, predict hospital readmissions, and recommend treatment plans.

However, concerns are growing about the potential for bias in healthcare AI systems. If these systems are trained on biased healthcare data or lack transparency, they could exacerbate existing inequalities rather than reduce them.

Recognizing Bias in Healthcare AI

In healthcare, "algorithmic bias" is not an abstraction. It can show up in scenarios like these:

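- A risk prediction tool may give Black patients lower priority for follow-up care because it uses past healthcare spending, rather than actual illness severity, as its measure of need. Because less has historically been spent on Black patients at the same level of illness, the model can mistake unequal access to care for better health.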

- A symptom-checking AI may underrate symptoms reported by Black patients if its training data reflect a history of those symptoms being under-documented.

- Language models may downplay patient concerns about pain or emotional distress.

- An automated triage system could refer white patients to specialists more frequently than Black patients with similar clinical profiles.

These biases are not intentional. They arise because AI systems learn from historical datasets, and those datasets often encode systemic biases and inequalities in how care was delivered.
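To make the spending-versus-severity problem concrete, here is a minimal toy sketch, not a model of any real product. It assumes two hypothetical groups, A and B, with identical illness burdens (counted as chronic conditions) but lower historical spending on group B. The `Patient` class, the `top_k` helper, and all numbers are made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class Patient:
    pid: str
    group: str                # hypothetical demographic group label
    chronic_conditions: int   # stand-in for true illness severity
    past_spending: float      # proxy target: prior healthcare costs (USD)


def top_k(patients, key, k):
    """Return the IDs of the k patients with the highest `key` value.

    sorted() is stable even with reverse=True, so ties keep input order.
    """
    ranked = sorted(patients, key=key, reverse=True)
    return [p.pid for p in ranked[:k]]


# Hypothetical toy data: groups A and B have identical illness burdens,
# but less has historically been spent on group B.
patients = [
    Patient("A1", "A", 4, 12_000.0),
    Patient("A2", "A", 2, 9_000.0),
    Patient("B1", "B", 4, 7_000.0),
    Patient("B2", "B", 2, 4_000.0),
]

# Choose two patients for follow-up care under each ranking rule.
by_need = top_k(patients, key=lambda p: p.chronic_conditions, k=2)
by_spending = top_k(patients, key=lambda p: p.past_spending, k=2)

print("Selected by illness severity:", by_need)
print("Selected by past spending:  ", by_spending)
```

Ranking by severity selects A1 and B1. Ranking by spending selects A1 and A2, so B1 is passed over even though B1 carries twice A2's illness burden. Nothing in the code mentions race or intent; the skew comes entirely from the choice of target.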

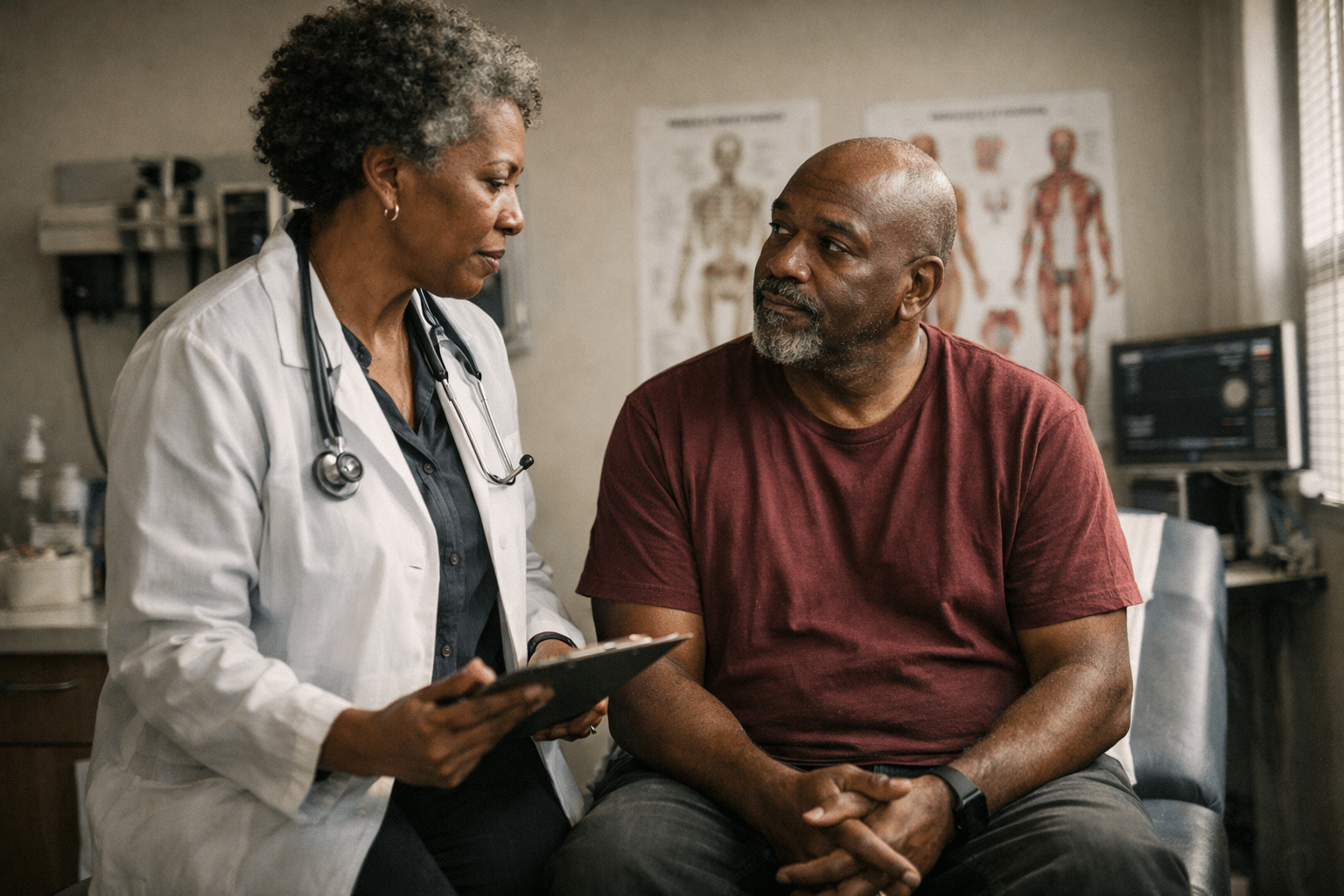

The Importance of Transparency

Transparency is essential for addressing bias in healthcare AI. Yet many AI systems do not disclose the demographics of their training data or how their performance varies across racial groups, and many operate as proprietary "black boxes" that outside auditors cannot examine.

Without transparency, it is hard to verify that AI tools perform equitably across populations. For Black patients, this opacity raises valid questions: Was the algorithm validated on patients like them? Does it perform as well for them as for others? Has anyone audited it for bias?
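One basic audit check compares a tool's error rates across groups. The sketch below is a minimal illustration of one such check among several. It assumes the auditor has outcome data showing which patients were truly high-risk, and it uses the false negative rate (the share of truly high-risk patients the tool failed to flag) as the metric. The function name and the records are hypothetical.

```python
from collections import defaultdict


def false_negative_rate_by_group(records):
    """Share of truly high-risk patients the tool failed to flag, per group.

    `records` is an iterable of (group, truly_high_risk, flagged) tuples.
    Groups with no truly high-risk patients are omitted from the result.
    """
    positives = defaultdict(int)
    missed = defaultdict(int)
    for group, truly_high_risk, flagged in records:
        if truly_high_risk:
            positives[group] += 1
            if not flagged:
                missed[group] += 1
    return {g: missed[g] / positives[g] for g in positives}


# Hypothetical audit sample: (group, truly high risk?, flagged by tool?)
records = [
    ("A", True, True), ("A", True, True), ("A", True, False), ("A", False, False),
    ("B", True, True), ("B", True, False), ("B", True, False), ("B", False, False),
]

rates = false_negative_rate_by_group(records)
gap = max(rates.values()) - min(rates.values())
for group, rate in sorted(rates.items()):
    print(f"group {group}: missed {rate:.0%} of high-risk patients")
print(f"largest gap between groups: {gap:.0%}")
```

In this made-up sample the tool misses truly high-risk patients in group B twice as often as in group A. A check like this is only possible when a vendor shares, or an auditor can obtain, the tool's outputs alongside outcomes broken down by group.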

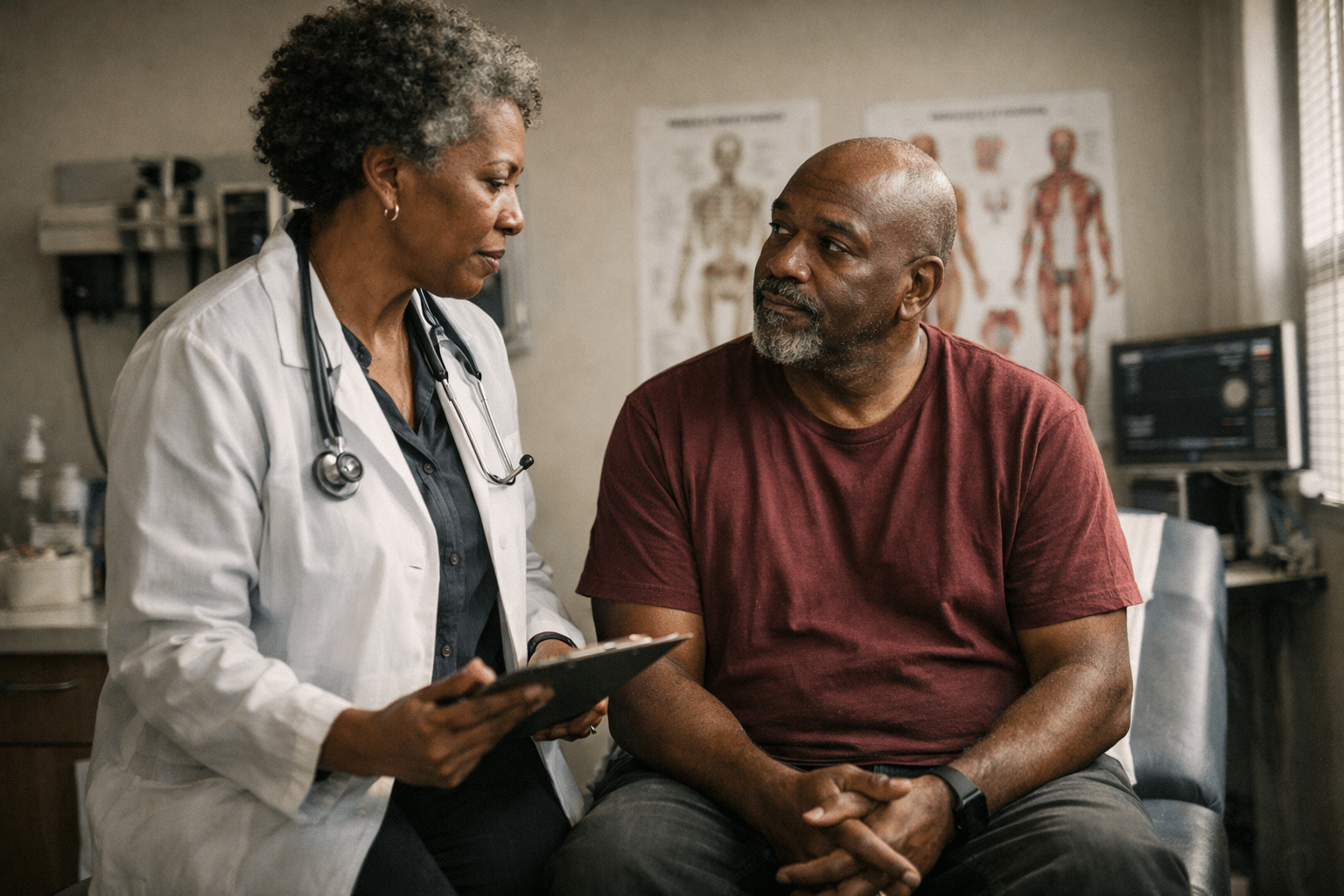

Real-World Impact on Black Patients

- If bias goes unaddressed, AI in healthcare could worsen disparities through mis-triage, under-treatment, and potentially harmful recommendations for Black patients.

- Patients and clinicians have the right to demand clarity, accountability, and oversight in the use of AI tools in healthcare settings.

- Policymakers need to implement regulations that require transparency, equity audits, reporting of adverse outcomes, and clear liability frameworks for AI in healthcare.